Understanding Audio & Video Deepfakes

CEO Fraud & More: Implications for Companies

Let’s start with audio! Audio deepfakes are created with artificial intelligence and machine learning algorithms that clone human voices. With as little as 30 seconds of audio from the target, these algorithms can generate convincing fake audio clips. The person on the other end of the line, be it a CEO, a grandparent, or a public figure, sounds just as they would in real life, which makes the fake almost impossible to tell apart from the real thing.

Audio tools such as

are responsible AI tools, but like any other tool they can be misused. In addition, darknet offerings provide audio-faking services.

For businesses, the threat is twofold. First, there’s the risk of fraud and deception. Imagine a scenario where an employee receives a call from someone impersonating their boss, instructing them to transfer funds or share confidential information. The employee, believing the request to be legitimate, complies, leading to financial loss or data breaches.

Second, the reputation of a company can be severely damaged if deepfakes are used to spread false information. A fake audio clip of a CEO making derogatory remarks or admitting to illegal activities, even if quickly debunked, can cause lasting harm to a company’s image.

The same is true for video deepfakes, as recent weeks have shown: both the Pope and Taylor Swift have become victims of deepfakes.

Grandson Trick & Similar Scams: Risks to Citizens

The dangers extend beyond the corporate world. For individuals, particularly seniors, the emotional and financial consequences can be devastating. Scammers using audio deepfakes can impersonate family members in distress, convincingly asking for urgent financial assistance. The infamous grandson trick, where an alleged grandchild calls a senior to ask for a money transfer, comes to mind. These scams prey on the emotional vulnerability of individuals, leading to loss of funds and emotional trauma.

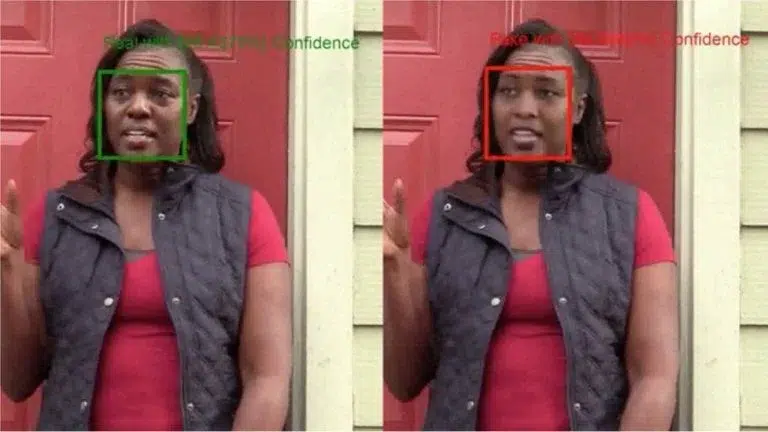

The Challenge of Detection

Detecting and combating audio deepfakes is a significant challenge. While technology to identify deepfakes is evolving, the pace at which deepfake technology itself is advancing means that detection methods often lag behind. This ongoing cat-and-mouse game complicates efforts to protect against the misuse of audio deepfakes.

Solution: Technology and awareness

Addressing the threat posed by audio deepfakes requires a multi-faceted approach.

Education and awareness are key: companies and individuals must be made aware of the existence of this technology and the forms of deception it can enable.

On the technological front, continued investment in detection methods is crucial. Regulation may also play a role, for example through laws that penalize the malicious use of deepfakes.

AI software tools you can use to uncover audio fakes include, for example:

In addition, businesses should implement stringent verification processes for transactions and sensitive actions, such as multi-factor authentication and verbal confirmation through known and secure channels.
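To make the verification advice concrete, here is a minimal Python sketch of a payment-approval rule, assuming a company keeps its own directory of verified contact numbers. Everything in it (`KNOWN_CONTACTS`, `DUAL_APPROVAL_THRESHOLD`, `PaymentRequest`, `required_checks`, the phone numbers and the threshold) is hypothetical and only illustrates the principle: a familiar voice or face on a call is never treated as proof of identity, so the employee calls back on a number from the directory and, for large amounts, obtains a second approval through a separate channel.

```python
from dataclasses import dataclass

# Hypothetical directory of verified numbers, maintained independently of any
# incoming call (e.g. from the HR system). Roles and numbers are illustrative.
KNOWN_CONTACTS = {
    "ceo": "+49 30 0000001",
    "cfo": "+49 30 0000002",
}

# Hypothetical amount above which a second approver is required.
DUAL_APPROVAL_THRESHOLD = 10_000

# Channels where a cloned voice or a faked video could be used.
VOICE_OR_VIDEO_CHANNELS = {"phone", "video", "voice_message"}


@dataclass
class PaymentRequest:
    requester_role: str  # who the caller claims to be
    channel: str         # how the request arrived: "phone", "video", "email", ...
    amount: float


def required_checks(req: PaymentRequest) -> list[str]:
    """Return the verification steps needed before the request may be executed."""
    known = KNOWN_CONTACTS.get(req.requester_role)
    if known is None:
        # Requester is not in the directory: never act on the request alone.
        return ["reject: requester not in directory"]

    checks = []
    if req.channel in VOICE_OR_VIDEO_CHANNELS:
        # Hang up and call back on the directory number, never on the number
        # shown on the incoming call, since caller ID can be spoofed.
        checks.append(f"call back on {known}")
    if req.amount >= DUAL_APPROVAL_THRESHOLD:
        # Large transfers also need a second person to confirm separately.
        checks.append("second approver via separate channel")
    return checks
```

For a call claiming to come from the CEO and asking for 25,000, `required_checks(PaymentRequest("ceo", "phone", 25_000))` returns both a callback step and a second-approver step. The rule deliberately ignores how convincing the caller sounded; only the out-of-band confirmation counts.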

Video Deepfake Detection

And while we are at it, let’s look at tools that detect video deepfakes.

- Our favourite: Deepware offers a free scanner tool where you can paste a link to check for deepfake content.

- Reality Defender supplies software to detect AI-generated images, voices, videos and texts.

- Sentinel is at the forefront of detection services.

- The company BioID offers deepfake detection services.

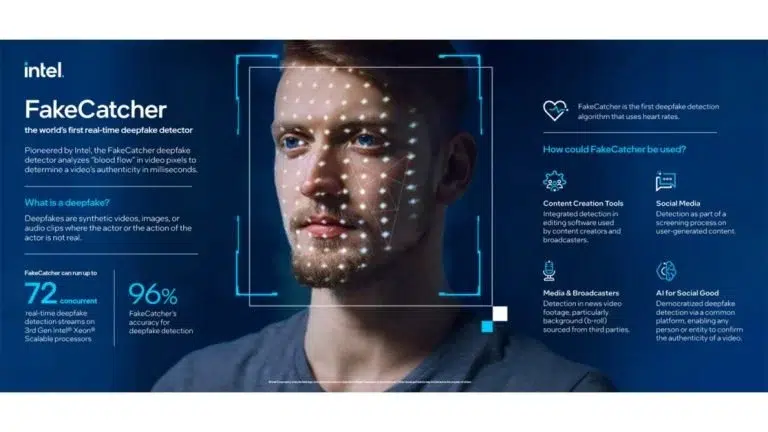

- As early as 2022, Intel introduced a real-time deepfake detector.

- Other projects, such as WeVerify, take a participatory verification approach and offer a browser plug-in (currently for Chrome only) to detect fraud.

- Since 2020, Microsoft has partnered with Sensity to detect AI-manipulated content and has also been using its own Video Authenticator tool to combat misinformation.

Also try Northwestern University’s quiz to see whether you can spot deepfakes, and check out the university’s informational website, which offers lots of links on deepfake detection.

AI Deepfakes: Our summary

The rise of deepfakes is a testament to the remarkable capabilities of modern AI, but it also highlights the dark side of technological progress. As companies and citizens grapple with this emerging threat, how we respond collectively will shape what comes next. Every individual can help expose deepfakes by making colleagues and fellow citizens aware of the danger.
