When will it take effect?

The regulation will enter into force 20 days after its publication in the Official Journal of the EU and will become fully applicable 24 months after its entry into force. Exceptions apply to:

- AI systems posing an unacceptable risk, i.e. prohibited practices (6 months after entry into force)

- codes of practice (9 months)

- rules for general-purpose AI (12 months)

- and obligations for certain high-risk AI systems (36 months after entry into force).

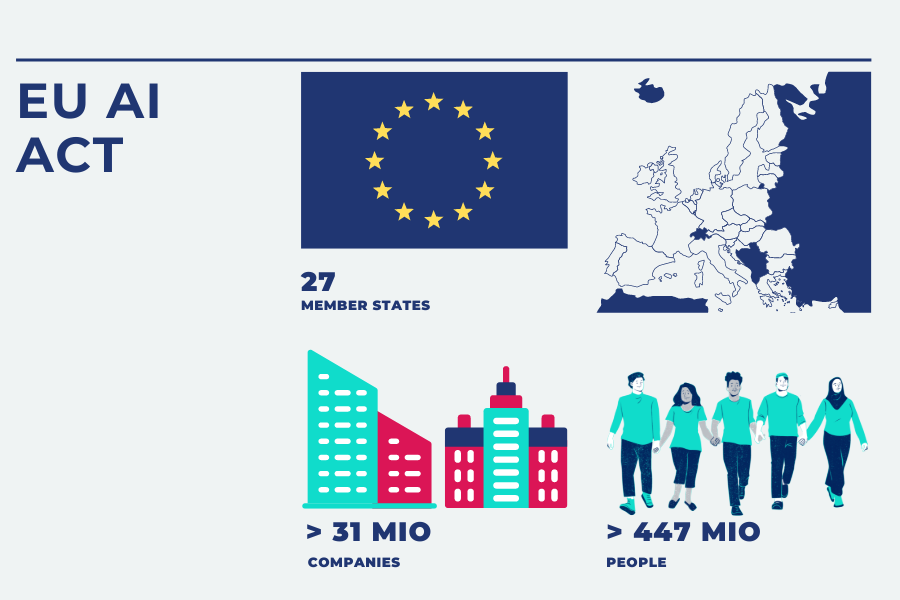

Who is affected by the EU AI Act?

The scope is defined in Article 2 of the draft. Accordingly, the rules apply to:

- Providers that place AI systems on the market or put them into service in the EU. This includes all legal entities, authorities, institutions, and other bodies that develop AI systems or have them developed.

- Users of AI systems located within the Union. This can also include natural persons, although use in the course of a purely personal, non-professional activity is excluded.

Therefore, the new law affects two main groups.

- First, it concerns companies and authorities in the EU that offer or use AI.

- Second, it affects people in the EU, as nearly everyone comes into contact with AI systems nowadays, whether through social media, online shopping, or navigation systems.

Providers and users must comply with the AI Act regardless of where they are established, as long as their AI systems are placed on the market or used in the EU, or their output is used within the EU. One example is an American tech company whose AI product is used in Europe.

However, the AI Act only protects individuals within the EU. In theory, EU-based companies could therefore deploy AI systems that are banned under the AI Act outside the EU.

What falls under the term "Artificial Intelligence"?

The legislator defines artificial intelligence (AI) broadly, covering the various techniques listed in Annex I of the draft. This includes software that uses:

- Machine learning approaches, including deep learning

- Logic-based and knowledge-based approaches such as knowledge representation, inductive logic programming, knowledge bases, inference and deductive engines, and (symbolic) reasoning and expert systems

- Statistical approaches, Bayesian estimation, and search and optimization methods

Additionally, the software must:

- Work toward a given set of human-defined objectives

- Generate outputs such as content, predictions, recommendations, or decisions, and

- Influence the environments it interacts with through these outputs.

What business sectors are affected by the EU AI Act?

Affected areas include, for example, those in which AI is used to score or assess people, such as recruitment and human resources, credit ratings, and education. Given the broad definition of AI above, numerous IT companies would also fall within the scope of the new rules.

The EU AI Act sets standards for AI products in risk areas

The AI Act classifies and regulates AI systems according to their risk. Systems posing an unacceptable risk are banned. High-risk systems must meet strict requirements, while low-risk systems are subject to no or minimal regulations.

The EU AI Act distinguishes four risk levels:

- Unacceptable risk: AI applications that assess human behavior, manipulate people, or exploit their vulnerabilities in ways that disadvantage or endanger them. Such technologies are banned, with narrow exceptions for law enforcement.

- High risk: Technologies that are not banned outright but pose serious risks to fundamental rights and to the health and safety of individuals. Their use is subject to strict requirements under the AI Act.

- Medium risk: AI systems that interact with humans must disclose that people are dealing with an AI system (known as “transparency and information obligations”).

- Low risk: AI systems that are not specifically regulated by the AI Act. Companies using them are encouraged to adopt voluntary codes of conduct.

What are the implications for companies?

The AI Act has significant consequences for companies in Europe and for the users of their AI systems. There are numerous administrative obligations, including:

- effective risk management

- quality assurance

- (technical) documentation

- proactive communication

Ongoing monitoring during operation and a timely response to problems are also essential. At the same time, the EU aims to support innovation through regulatory sandboxes (“test labs”) set up by national authorities, in which AI systems can be developed and tested under supervision before they are placed on the market.

The practical technical implementation, which the AI Act does not directly address, will also be a challenge and could involve significant costs. Its vague requirements leave some questions open, and because violations can be sanctioned, this creates legal uncertainty. At the same time, the general wording gives companies some flexibility in how they design their own solutions.

Next steps for companies

- Are you already using AI or planning to do so?

- If yes: Is there an overview of the AI systems used in the company?

- Is there a strategy for using AI?

- Is there an internal policy for governing AI that aligns with the requirements of the EU AI Act? Have you appointed a dedicated AI governance team?

- What function is assigned to AI systems in the company?

- Additionally, all companies should train their employees in the use of AI (“AI literacy”).

If you have questions about using AI in marketing, our experts look forward to assisting you.